Fair representation learning with fine-grained adversarial regulation of bias flow

Improve fairness in deep learning-based systems

Societal biases and stereotypes are reflected in various deep learning (DL) and natural language processing (NLP) models and applications, including contextualized word embeddings and text classification.

Project Details

Young Career Project

Project Leader: Navid Rekabsaz

Call: 10/2021

The current paradigm for mitigating such biases adds fairness criteria to the model's optimization, yielding a new model fixed at one particular fairness-utility trade-off. In the FAIRFLOW project, we pursue a fundamentally different approach to bias mitigation in DL/NLP models. We introduce novel bias regulation networks, which exploit adversarial training to provide fine-grained control over biases in the information flow of the main network. These regulation networks are stand-alone extensions integrated into the main network's architecture.

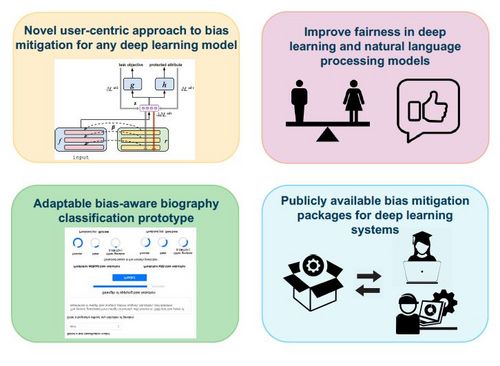
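The description above does not specify how a regulation network is parameterized or trained, so the following is only a minimal sketch under stated assumptions, not the FAIRFLOW method. It assumes, hypothetically, that a regulation network is a residual linear module h' = h + alpha · (W h) attached to the main network's representation h, where `alpha` is a hypothetical runtime strength knob. For the adversarial part it swaps in gradient reversal, a standard adversarial debiasing technique: an adversary learns to predict the protected attribute from h', while the regulation network receives the adversary's gradient with its sign flipped (scaled by `lam`). All names (`RegulationNetwork`, `LogisticHead`, `train_step`, `alpha`, `lam`) and the toy data are illustrative assumptions; the code uses plain Python so it runs without a deep learning framework.

```python
import math
import random


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


class RegulationNetwork:
    """Hypothetical stand-alone regulation module (an assumption, not FAIRFLOW's design).

    Adds a learned linear correction to the main network's representation h,
    scaled by a runtime strength alpha in [0, 1]:  h' = h + alpha * (W @ h).
    """

    def __init__(self, dim: int):
        self.W = [[0.0] * dim for _ in range(dim)]

    def forward(self, h, alpha=1.0):
        delta = [dot(row, h) for row in self.W]
        return [x + alpha * d for x, d in zip(h, delta)]


class LogisticHead:
    """Linear binary classifier, used here as both task head and adversary."""

    def __init__(self, dim: int):
        self.w = [0.0] * dim
        self.b = 0.0

    def predict(self, h):
        return sigmoid(dot(self.w, h) + self.b)


def train_step(reg, task, adv, h, y, a, lr=0.1, lam=1.0, alpha=1.0):
    """One simultaneous update using gradient reversal.

    The task head and the adversary each minimise their own logistic loss.
    The regulation network minimises the task loss but receives the
    adversary's gradient multiplied by -lam, so it is pushed to make the
    protected attribute `a` harder to predict from the regulated representation.
    """
    hr = reg.forward(h, alpha)
    err_y = task.predict(hr) - y  # d(task BCE)/d(logit)
    err_a = adv.predict(hr) - a   # d(adversary BCE)/d(logit)
    # Gradients of both losses w.r.t. the regulated representation hr.
    g_task = [err_y * w for w in task.w]
    g_adv = [err_a * w for w in adv.w]
    # Regulation network: d hr[i] / d W[i][j] = alpha * h[j].
    for i, row in enumerate(reg.W):
        g_i = g_task[i] - lam * g_adv[i]  # adversary gradient is reversed
        for j in range(len(row)):
            row[j] -= lr * g_i * alpha * h[j]
    # Heads: ordinary gradient descent on their own losses (using pre-update hr).
    for head, err in ((task, err_y), (adv, err_a)):
        for j in range(len(head.w)):
            head.w[j] -= lr * err * hr[j]
        head.b -= lr * err


# Toy synthetic data (illustrative only): coordinate 0 carries the task
# label y, coordinate 1 carries the protected attribute a.
rng = random.Random(0)
reg, task, adv = RegulationNetwork(2), LogisticHead(2), LogisticHead(2)
for _ in range(2000):
    t, g = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    train_step(reg, task, adv, [t, g], y=float(t > 0), a=float(g > 0))

# At runtime, alpha selects how strongly the learned correction is applied.
h = [0.3, -1.2]
print(reg.forward(h, alpha=0.0))  # unmodified representation
print(reg.forward(h, alpha=1.0))  # fully regulated representation
```

In this sketch, `alpha=0.0` returns the unmodified representation and `alpha=1.0` applies the full learned correction; whether intermediate values give a smooth fairness-utility trade-off is an assumption the sketch does not test. Plain simultaneous gradient reversal on a toy problem like this can also oscillate rather than converge, so the loop illustrates the update rule, not a tuned training recipe.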

In contrast to the current paradigm, this approach will give end-users extensive flexibility at runtime, is expected to yield better bias mitigation results, and will enable the simultaneous mitigation of several biases with respect to different protected attributes, such as gender, race, and age. In the FAIRFLOW project, we will study the effectiveness of this approach on (1) various contextualized word embeddings and (2) downstream text classification tasks, and will compare the results with strong recent baselines. Besides basic research, we will showcase the benefits of FAIRFLOW by implementing a prototype of an adaptable, bias-aware biography classifier, and will release packages for convenient adoption of the bias mitigation solution. The FAIRFLOW project aims to benefit society by providing bias-free DL solutions, and is particularly in line with the United Nations Sustainable Development Goal on gender equality.

DI Dr. Navid Rekabsaz, BSc.

DI Dr. Navid Rekabsaz, BSc. is an assistant professor at the Institute of Computational Perception. Previously, he was a postdoctoral researcher at the Idiap Research Institute (affiliated with EPFL) and a PhD candidate at TU Wien. He explores deep learning methods in natural language processing and information retrieval, with a focus on fairness and algorithmic bias mitigation.

Go to JKU Homepage
